What Is a Face?

Published in Fake AI by Meatspace Press 2021, edited by Frederike Kaltheuner.

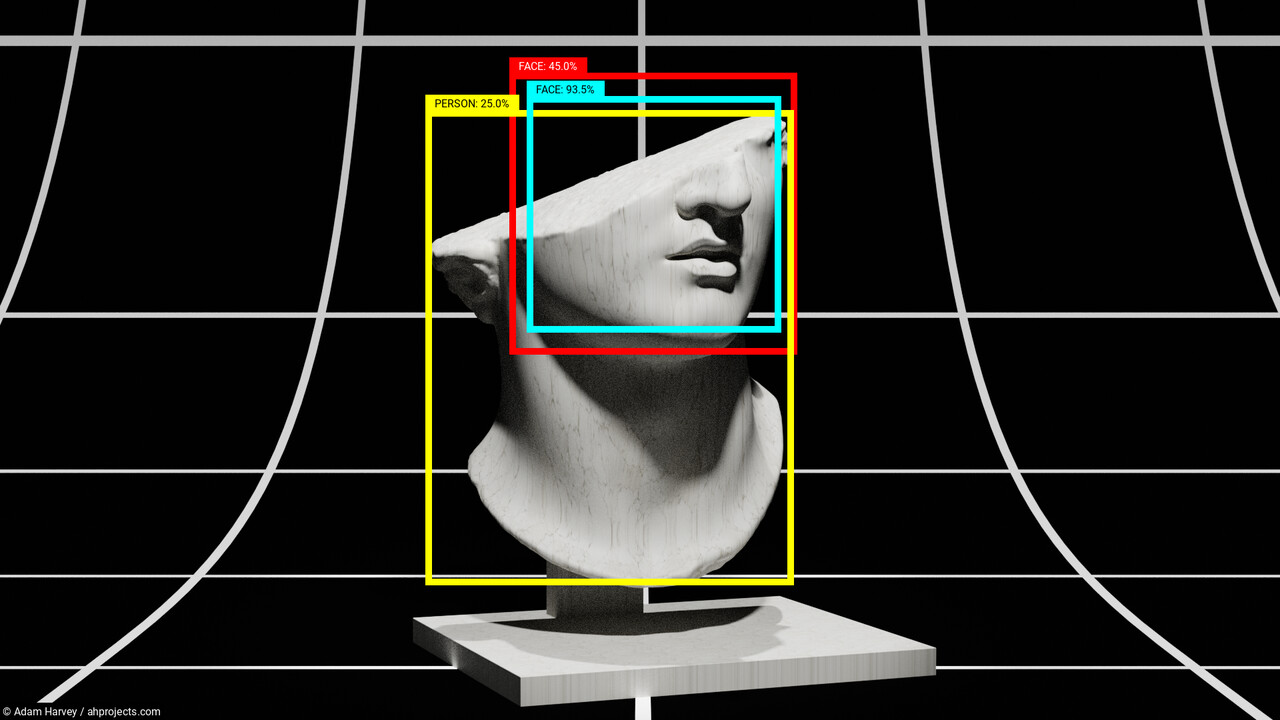

Graphic © Adam Harvey 2021. Based on public domain 3D model of “Fragmentary Colossal Head of a Youth” by Scan the World.

Until the 20th century, faces could only be seen through the human perceptual system. As face recognition and face analysis technologies developed throughout the last century, a new optical system emerged that “sees” faces through digital sensors and algorithms. Today, computer vision has given rise to new ways of looking at each other that build on 19th century conceptions of photography, but implement 21st century perceptual technologies. Of course, seeing faces through multi-spectral modalities at superhuman resolutions is not the same as seeing a face with one’s own eyes. Clarifying ambiguities and creating new lexicons around face recognition are therefore important steps in regulating biometric surveillance technologies. This effort begins with exploring answers to a seemingly simple question: what is a face?

The word “face” generally refers to the front-most region of the uppermost part of the human body. However, there are no dictionary definitions that strictly define where the face begins or where it ends. Moreover, to “see” hinges on an anthropocentric definition of vision which assumes a visible spectrum of reflected light received through the retina, visual cortex, and fusiform gyrus that is ultimately understood as a face in the mind of the observer. Early biometric face analysis systems built on this definition by combining the human perceptual system with statistical probabilities. But the “face” in a face recognition system takes on new meanings through the faceless computational logic of computer vision.

Computer vision requires strict definitions. Face detection algorithms define faces with exactness, although each algorithm may define these parameters in different ways. For example, in 2001, Paul Viola and Michael Jones[1] introduced the first widely used face detection algorithm, which defined a frontal face within a square region using a 24 × 24 pixel grayscale definition. The next widely used face detection algorithm, based on Dalal and Triggs’ Histogram of Oriented Gradients (HOG) algorithm,[2] was later implemented in dlib[3] and looked for faces at 80 × 80 pixels in grayscale. Though in both cases images could be upscaled or downscaled, neither performed well at resolutions below 40 × 40 pixels. Recently, convolutional neural network research has redefined the technical meaning of face. Algorithms can now reliably detect faces smaller than 20 pixels in height,[4] while new face recognition datasets, such as TinyFace, aim to develop low-resolution face recognition algorithms that can recognize an individual at around 20 × 16 pixels.[5]
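
The detector sizes above can be made concrete with a short sketch. A detector with a fixed square window only matches faces close to its native size, so an image is resized, or scanned as an image pyramid, until the face fits the window. The window sizes below are the ones quoted in the text; the helper names and the 1.25 scale step are assumptions for illustration, and this is not code from the Viola-Jones paper or from dlib (the original Viola-Jones detector scaled its features rather than the image).

```python
# Native detector window sizes (pixels) as quoted in the text; illustrative
# constants only, not values read from either library.
DETECTOR_WINDOWS = {"viola_jones": 24, "dlib_hog": 80}


def rescale_factor(face_px, window):
    """Factor by which an image must be resized so that a face `face_px`
    pixels tall fills a detector window `window` pixels tall.
    A value above 1.0 means the image has to be upsampled."""
    return window / face_px


def pyramid_levels(width, height, window, step=1.25):
    """Sizes of successively downscaled copies of an image (an image
    pyramid) on which a fixed-size square window still fits. Scanning
    every level lets a fixed window find faces larger than itself."""
    levels = []
    scale = 1.0
    while True:
        w, h = int(width / scale), int(height / scale)
        if w < window or h < window:
            break
        levels.append((w, h))
        scale *= step
    return levels


if __name__ == "__main__":
    for name, window in DETECTOR_WINDOWS.items():
        factor = rescale_factor(40, window)
        print(f"{name}: a 40 px face needs resizing by {factor:.2f}x")
    print(pyramid_levels(100, 100, DETECTOR_WINDOWS["viola_jones"]))
```

With these numbers, a 40-pixel face must be shrunk to 0.6× for a 24-pixel window but enlarged 2× for an 80-pixel window, and upsampling cannot add detail that was never captured.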

Other face definitions include the ISO/IEC 19794-5 biometric passport guidance, which calls for a minimum of 120 interocular (pupil to pupil) pixels. In 2017, however, the US National Institute of Standards and Technology (NIST) published a recommendation for using between 70 and 300 interocular pixels. NIST also noted that “the best accuracy is obtained from faces appearing in turnstile video clips with mean minimum and maximum interocular distances of 20 and 55 pixels respectively.”[6] More recently, Clearview, a company that provides face recognition technologies to law enforcement agencies, claimed to use a 110 × 110 pixel definition of a face.[7] These wide-ranging technical definitions are already being challenged by the face masks adopted during the Covid-19 pandemic, which have led to significant drops in face recognition performance.
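
Interocular thresholds like these reduce to a distance check between two pupil centres. The sketch below is a minimal illustration, assuming the pupil coordinates have already been located by some facial-landmark detector (not shown). The thresholds are copied from the figures quoted above; the function and constant names are hypothetical, and neither ISO/IEC 19794-5 nor NIST publishes this code.

```python
import math

# Thresholds as quoted in the text, in pixels between pupil centres.
ISO_19794_5_MIN_IOD = 120
NIST_2017_IOD_RANGE = (70, 300)


def interocular_distance(left_pupil, right_pupil):
    """Euclidean distance in pixels between two (x, y) pupil centres."""
    return math.dist(left_pupil, right_pupil)


def classify_face(left_pupil, right_pupil):
    """Report which of the quoted interocular guidelines a face satisfies."""
    iod = interocular_distance(left_pupil, right_pupil)
    low, high = NIST_2017_IOD_RANGE
    return {
        "iod_px": iod,
        "meets_iso_minimum": iod >= ISO_19794_5_MIN_IOD,
        "within_nist_range": low <= iod <= high,
    }


if __name__ == "__main__":
    # Hypothetical pupil coordinates, as a landmark detector might return.
    print(classify_face((410, 300), (500, 300)))
```

A face with 90 pixels between the pupils passes the NIST range but fails the ISO minimum. NIST's own turnstile-video observation, with mean distances of 20 to 55 pixels, shows that such a check encodes guidance rather than a hard technical limit.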

The face is not a single biometric but includes many sub-biometrics, each with a different potential for identification. During the 2020 International Face Performance Conference, NIST proposed expanding the concept of a biometric face template to include an additional template for the periocular region,[8] the area around the eyes that a medical mask leaves uncovered. This raises the question of what exactly “face” recognition is if it analyzes only a sub-region, or sub-biometric, of the face. What would periocular face recognition be called in the profile view, if not monocular recognition? The distinction between face recognition and iris recognition is becoming increasingly thin.

At a high enough resolution, everything becomes unique. Conversely, at a low enough resolution everything looks basically the same. In “Defeating Image Obfuscation with Deep Learning,” McPherson et al. found that face recognition performance dropped by 15–20% (using rank-1 and rank-5 metrics respectively) as the image resolution decreased from 224 × 224 to 14 × 14 pixels, with a dataset of only 530 people. But as the number of identities in a matching database increases, so do the inaccuracies. Million-scale recognition at 14 × 14 pixels is simply not possible.
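
Rank-1 and rank-5 are identification metrics: a search counts as a hit at rank k if the correct identity appears among the k best-scoring gallery entries. The sketch below shows how such a figure can be computed from a matrix of similarity scores; the scores are made up, the names are hypothetical, and this is not McPherson et al.'s evaluation code.

```python
def rank_k_accuracy(scores, true_ids, k):
    """Fraction of probes whose correct gallery identity appears among the
    k highest-scoring gallery entries.

    scores[i][j] -- similarity between probe i and gallery identity j
    true_ids[i]  -- index of probe i's correct gallery identity
    Ties are broken by gallery index, since Python's sort is stable.
    """
    hits = 0
    for row, true_id in zip(scores, true_ids):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if true_id in ranked[:k]:
            hits += 1
    return hits / len(true_ids)


if __name__ == "__main__":
    scores = [
        [0.9, 0.1, 0.3],  # probe 0: correct identity ranked 1st
        [0.2, 0.5, 0.6],  # probe 1: correct identity ranked 2nd
        [0.4, 0.3, 0.2],  # probe 2: correct identity ranked 3rd
    ]
    true_ids = [0, 1, 2]
    for k in (1, 2, 3):
        print(k, rank_k_accuracy(scores, true_ids, k))
```

Rank-5 accuracy can never be lower than rank-1 accuracy, and every identity added to the gallery is another chance for an impostor to outscore the true match.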

Limiting the resolution of biometric systems used for mass surveillance could contribute profoundly towards preventing their misuse, but such regulation requires unpacking the technical language further and making room for legitimate uses, such as consumer applications or investigative journalism, while simultaneously blunting the capabilities of authoritarian police or rogue surveillance companies. Calls to ban face recognition would be better served by replacing ambiguous terminology with more technically precise language about the resolution, region of interest, spectral bandwidth, and quantity of biometric samples. If law enforcement agencies were restricted to using low-resolution 14 × 14 pixel face images to search against a database of millions, the list of potential matches would likely include thousands or tens of thousands of faces. That would make the software virtually useless, since an operator would still have to sort through those images manually. In effect, this would defeat the purpose of face recognition, which is to compress the search space into a human-scale dataset in order to find the needle in the haystack. Severely restricting the resolution of a face recognition system means that searching for a needle would only yield more haystacks.
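
The arithmetic behind the “thousands or tens of thousands” estimate can be sketched with a standard simplification for one-to-many search: if each comparison against a non-matching identity independently exceeds the match threshold with probability FMR (the false match rate), a gallery of N people yields about N × FMR false candidates. The 1% FMR below is a hypothetical figure chosen for illustration, not a measured rate for 14 × 14 pixel images, and real comparisons are not fully independent.

```python
def expected_false_candidates(gallery_size, fmr):
    """Expected number of non-mated gallery entries that score above the
    match threshold, if each comparison false-matches independently with
    probability `fmr`. Uses N rather than N - 1 non-mated entries."""
    return gallery_size * fmr


def prob_any_false_candidate(gallery_size, fmr):
    """Probability that at least one non-mated entry exceeds the threshold,
    under the same independence assumption: 1 - (1 - fmr) ** N."""
    return 1.0 - (1.0 - fmr) ** gallery_size


if __name__ == "__main__":
    fmr = 0.01  # hypothetical false match rate for very low-resolution probes
    for n in (530, 1_000_000, 10_000_000):
        print(f"N={n:>10,}: ~{expected_false_candidates(n, fmr):,.0f} false candidates, "
              f"P(any) = {prob_any_false_candidate(n, fmr):.3f}")
```

The same per-comparison error rate that leaves a handful of false candidates in a 530-person gallery leaves tens of thousands in a gallery of millions, which is the “more haystacks” effect described above.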

In 2020, a project by researchers at Duke University, called PULSE, showed that the restricted perceptual space of a 16 × 16 pixel face image allows wildly different identities to downscale to perceptually indistinguishable images.[9] The project drew criticism because it also showed that up-sampling a low-resolution image of Barack Obama produced a high-resolution image of a light-skinned face. Nevertheless, the work confirmed the technical reality that two faces can appear identical at 16 × 16 pixels but resemble completely different identities at 1024 × 1024 pixels. As image resolution decreases, so too does the dimensionality of identity.
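
Why two different faces can collapse into the same low-resolution image follows from how downscaling averages pixels. The sketch below is not PULSE, which searches the latent space of a generative model for realistic faces that downscale correctly; it only demonstrates the many-to-one property that makes such a search possible. Area averaging is assumed as the downscaling method, random pixels stand in for a face, and a 64 × 64 to 16 × 16 reduction keeps it quick; the helper names are hypothetical.

```python
import random


def block_average(img, factor):
    """Downscale a square grayscale image (a list of rows) by averaging
    non-overlapping factor x factor blocks (area downsampling)."""
    out_n = len(img) // factor
    return [
        [
            sum(img[y][x]
                for y in range(by * factor, (by + 1) * factor)
                for x in range(bx * factor, (bx + 1) * factor)) / factor ** 2
            for bx in range(out_n)
        ]
        for by in range(out_n)
    ]


def checkerboard_perturb(img, amplitude):
    """Add +amplitude / -amplitude in a checkerboard pattern. Every even-sized
    block contains as many + as - cells, so block averages are unchanged."""
    return [
        [v + (amplitude if (x + y) % 2 == 0 else -amplitude)
         for x, v in enumerate(row)]
        for y, row in enumerate(img)
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    size, factor = 64, 4  # 64 x 64 down to 16 x 16
    image_a = [[rng.randint(60, 190) for _ in range(size)] for _ in range(size)]
    image_b = checkerboard_perturb(image_a, amplitude=50)
    differing = sum(a != b for ra, rb in zip(image_a, image_b) for a, b in zip(ra, rb))
    print(f"{differing} of {size * size} pixels differ")
    print("identical at 16 x 16:",
          block_average(image_a, factor) == block_average(image_b, factor))
```

Every pixel differs between the two images, yet their 16 × 16 versions are identical. The same construction works for 1024 × 1024 images with a factor of 64, and any zero-sum pattern within each block would do, so the number of high-resolution images sharing one low-resolution image is enormous.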

Unless a computational definition of “face” can be appended to the current language around biometrics, the unchallenged ambiguities between a 16 × 16 pixel face and a 1024 × 1024 pixel face will likely be decided by the industries or agencies that stand to benefit most from the increasingly invasive acquisition of biometric data. Moreover, better regulatory definitions of “face” that include specific limits on the resolution of face imagery could help curb the use of face recognition technologies for mass surveillance. The monitoring and tracking of our every public move (at meetings, in classrooms, at sporting events, and even through car windows) is no longer limited to law enforcement agencies. Many individuals are already scraping the internet in order to create their own face recognition systems.

A better definition of “face” in recognition technology should not be limited to sampling resolution alone but should also include the spectral capacity, duration of capture, and the boundaries of where a face begins and where it ends. As the combinatory resolution of a face decreases, so does its potential for mass surveillance. Limiting resolution means limiting power and its abuses.

© Adam Harvey 2021. All Rights Reserved.

Notes:

- Commissioned by Frederike Kaltheuner for Fake AI published by Meatspace Press 2021

- Written in January 2021, published in December 2021

- The text in this version differs slightly from the Fake AI publication and includes several clarifications (e.g., changing “if not ocular recognition” to “if not monocular recognition”)

[1] Viola, P. & Jones, M.J. (2004) Robust Real-Time Face Detection. International Journal of Computer Vision 57, 137–154. DOI: 10.1023/B:VISI.0000013087.49260.fb

[2] Dalal, N. & Triggs, B. (2005) Histograms of oriented gradients for human detection. IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), vol. 1, pp. 886–893. DOI: 10.1109/CVPR.2005.177

[4] Hu, P. & Ramanan, D. (2017) Finding Tiny Faces. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1522–1530. DOI: 10.1109/CVPR.2017.166

[6] NIST, Face Recognition Vendor Test (FRVT) Ongoing. https://www.nist.gov/programs-projects/face-recognition-vendor-test-frvt-ongoing

[7] Clearview AI’s founder Hoan Ton-That speaks out [Interview]. https://www.youtube.com/watch?v=q-1bR3P9RAw

[8] NIST, International Face Performance Conference (IFPC) 2020, Day 1, Part 1 [video]. https://www.nist.gov/video/international-face-performance-conference-ifpc-2020-day-1-part-1