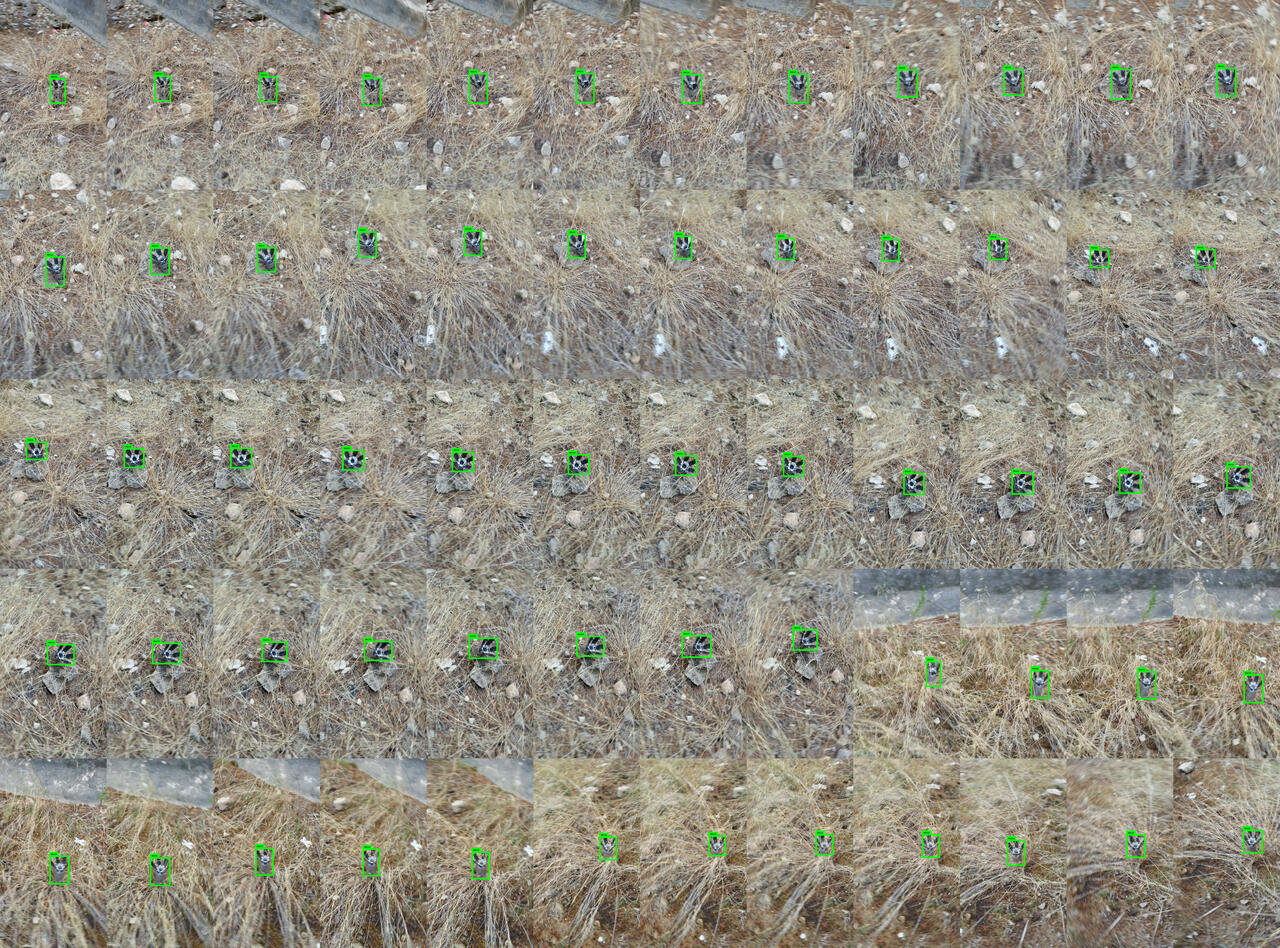

Object detection results on the 9N235/9N210 submunition. © 2022 Adam Harvey

VFRAME

For the latest results on this research, visit https://vframe.io/9n235/

VFRAME.io (Visual Forensics and Metadata Extraction) is a computer vision toolkit designed for human rights researchers. It aims to bridge the gap between state-of-the-art artificial intelligence used in the commercial sector and the needs of human rights researchers and investigative journalists working with large video or image datasets, making that technology accessible and tailored to their work. VFRAME is under active development and was most recently presented at the Geneva International Center for Humanitarian Demining (GICHD) Mine Action Technology Workshop in November 2021.

VFRAME began as an exploration in 2017 with researchers at the Syrian Archive in Berlin to determine whether computer vision could be applied to their archive. Over the last 4 years, the VFRAME project has developed several techniques toward this goal, resulting in the latest research on detecting a submunition that appears often in conflict zone documentation from Ukraine. Read more about it at https://vframe.io/9n235/

Below is an example of the latest research, which uses photography, photogrammetry, 3D-rendering, 3D-printing, and convolutional neural networks to build a high-performance submunition detector.

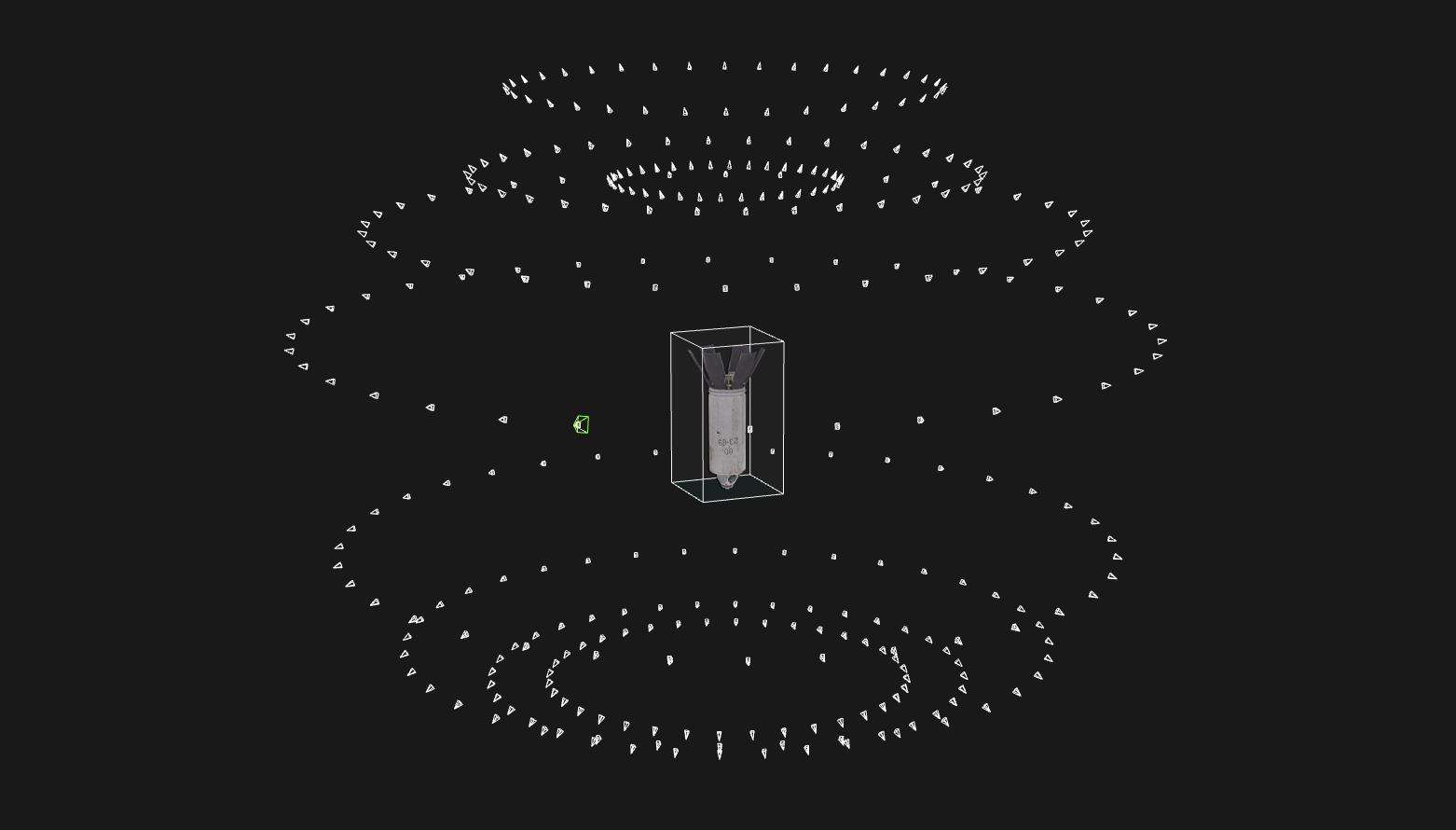

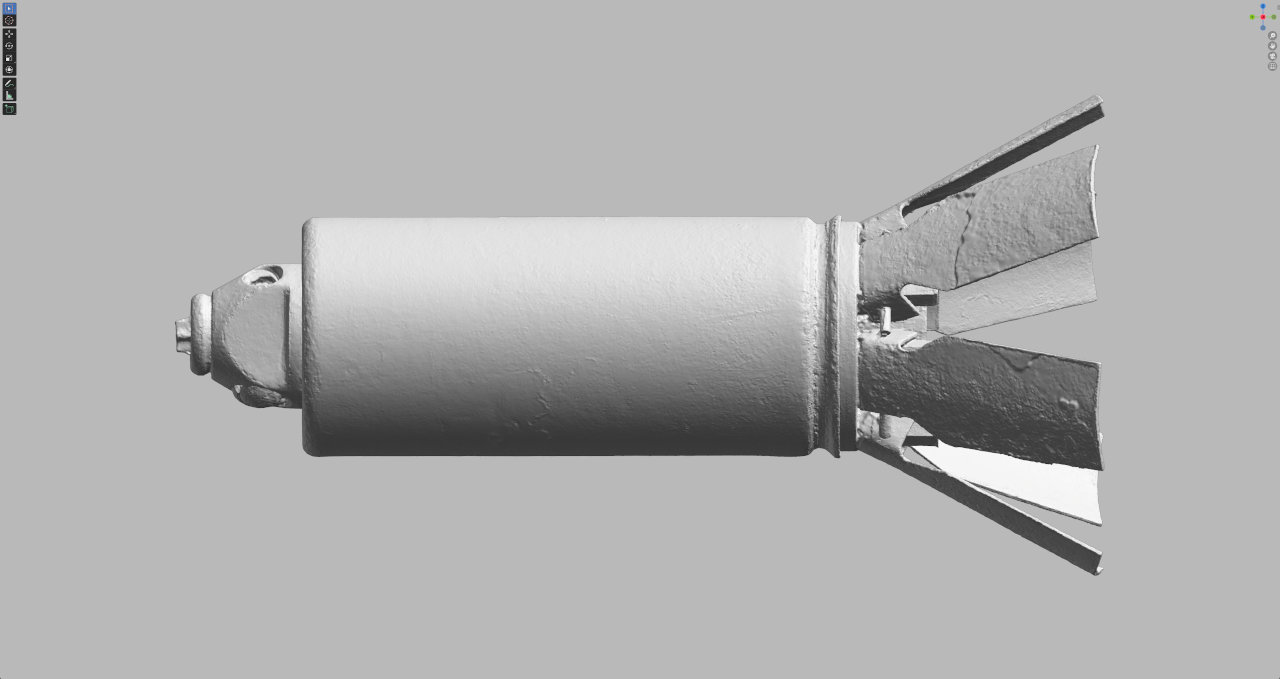

Photogrammetry scan of the 9N235 submunition by VFRAME in collaboration with Tech 4 Tracing. Graphic: © Adam Harvey

3D visualization of finalized 9N210/9N235 photogrammetry model. © Adam Harvey

Benchmark images created for the 9N235 detector using a 3D-printed replica. © Adam Harvey